If All You Have Is LLMs, Everything Looks Like a Gen AI Project: A Case for SLMs and Classic ML

We have a problem in the tech world. Many teams get carried away and reach for LLMs when all they need is a straightforward classifier or regression model. Because hooking the OpenAI API into an existing system is as easy as ABC, many engineering teams bolt an LLM onto every problem at hand. Sometimes it's simply a bad choice; other times, it's a way to showcase Gen AI capabilities to clients or bosses.

Do you remember Maslow's law of the instrument? It goes like this: if all you have is a hammer, everything looks like a nail.

In the world of AI, if all you have is an LLM, everything looks like a Gen AI problem.

Why is this happening? Most organizations have trained their software engineering teams in Gen AI and the OpenAI APIs, so there is a certain cognitive bias toward slapping Gen AI onto every problem.

It's important to understand the tools and options available in the world of Artificial Intelligence, from classical machine learning to Gen AI. Knowing what solutions exist helps teams choose the right one for their business problems. Above all, it's important to understand the difference between Gen AI and traditional ML approaches, so let's start there:

Generative AI vs. Traditional Machine Learning

Think of traditional machine learning as a calculator: fast, precise, and built to solve specific equations. It thrives on structured inputs, such as numbers in rows and columns, and produces clear, deterministic answers to questions like “Is this transaction fraudulent?” or “What’s tomorrow’s demand forecast?” Generative AI, on the other hand, is more like a storyteller. It can take a prompt in natural language and create something new, such as a draft email, a block of code, a product description, or even an image. ML models predict outcomes within rigid frameworks, whereas Gen AI models generate outputs in open-ended contexts. Both have their place: ML provides accuracy and efficiency for structured problems, while Gen AI brings flexibility, reasoning, and creativity to unstructured ones. Choosing the right one will save you time and money and help you scale your solutions.

Most of today's Gen AI models use the Transformer architecture, which was introduced in 2017. We are now seeing the advent of SLMs (Small Language Models), which are much smaller and are meant to replace LLMs in specific use cases.

Starting with GPT-5, OpenAI itself uses a smart strategy to select the right model based on the user's input. Grok was the first such AI to auto-select the right model based on the user's input:

“Grok now automatically decides how much to think about your question! You can override ‘Auto’ mode and force heavy thinking at will with one tap. https://t.co/lCU0YI1ALq”

Elon Musk (@elonmusk), July 26, 2025

How OpenAI Is Reducing Cost with GPT-5

Reducing inference cost was a key objective for OpenAI when developing GPT-5. While the new model offers major performance upgrades in reasoning and reliability, its architecture was specifically designed to be more resource-efficient than previous models, particularly for common use cases.

- Hybrid multi-model architecture: Unlike older models that used a single, large model for all requests, GPT-5 uses an intelligent router that directs each query to the appropriate model based on its complexity.
  - Simple requests are handled by faster, smaller, and cheaper variants like gpt-5-mini or gpt-5-nano.
  - More complex queries that require deep reasoning are routed to the more powerful "GPT-5 Thinking" model.

- Adaptive compute allocation: This design allows OpenAI to allocate the necessary compute power for a task without wasting resources on simple requests. For instance, gpt-5-mini is significantly faster and cheaper for simpler tasks, while the full gpt-5 model is used for maximum performance.

- Higher efficiency per output: For complex coding, reasoning, and scientific tasks, GPT-5 is not only more accurate but also more efficient. For example, it uses 22% fewer output tokens and 45% fewer tool calls than the o3 model on coding benchmarks.

- New data type: The use of an MXFP4 data type has reportedly reduced memory, bandwidth, and compute requirements by up to 75% compared to the BF16 format used in earlier models.
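This is not OpenAI's router. It is a minimal sketch of complexity-based routing that assumes a crude heuristic based on prompt length and keywords. The tier names (small-model, medium-model, reasoning-model), the cue list, and the score thresholds are all hypothetical. A production router would more likely be a trained classifier.

```python
from dataclasses import dataclass

# Hypothetical tier names; placeholders, not real model IDs.
SMALL, MEDIUM, LARGE = "small-model", "medium-model", "reasoning-model"

# Illustrative (assumed) cues that a prompt needs multi-step reasoning.
REASONING_CUES = ("prove", "plan", "step by step", "debug", "compare", "why")


@dataclass
class RoutingDecision:
    model: str
    score: int


def complexity_score(prompt: str) -> int:
    """Crude heuristic: longer prompts and reasoning cues raise the score."""
    text = prompt.lower()
    score = len(text.split()) // 50  # one point per ~50 words
    score += 2 * sum(cue in text for cue in REASONING_CUES)  # plain substring match
    return score


def route(prompt: str) -> RoutingDecision:
    """Send low-scoring prompts to the cheap tier and escalate as the score grows."""
    score = complexity_score(prompt)
    if score <= 1:
        model = SMALL
    elif score <= 3:
        model = MEDIUM
    else:
        model = LARGE
    return RoutingDecision(model, score)


if __name__ == "__main__":
    print(route("What is the capital of France?").model)  # → small-model
    print(route("Plan a product launch step by step and compare three pricing strategies.").model)  # → reasoning-model
```

The design point is that the routing decision itself must be far cheaper than the model call it saves. That is why even a real router is typically a small, fast model rather than a large one.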

Generative AI: Introducing SLMs

Imagine you’re orchestrating a streamlined factory floor. Every tool at hand has a specific job: pick, place, weld. The tool is not chosen for sheer power, but for its reliability, precision, and efficiency. That’s the future NVIDIA sees for agentic AI, with Small Language Models (SLMs) as the nimble, purpose-built workhorses.

NVIDIA’s Bold Vision

NVIDIA’s recent paper, “Small Language Models Are the Future of Agentic AI”, isn’t just rhetoric; it’s a challenge to the “bigger-is-better” mindset. Their argument rests on three clear pillars:

- SLMs Are Capable Enough: Most agentic systems involve repetitive, narrowly scoped tasks such as intent parsing, template generation, or tool orchestration. For these, fine-tuned SLMs (<10B parameters) can match or even outperform large LLMs. Their nimbleness isn’t a compromise; it’s a feature.

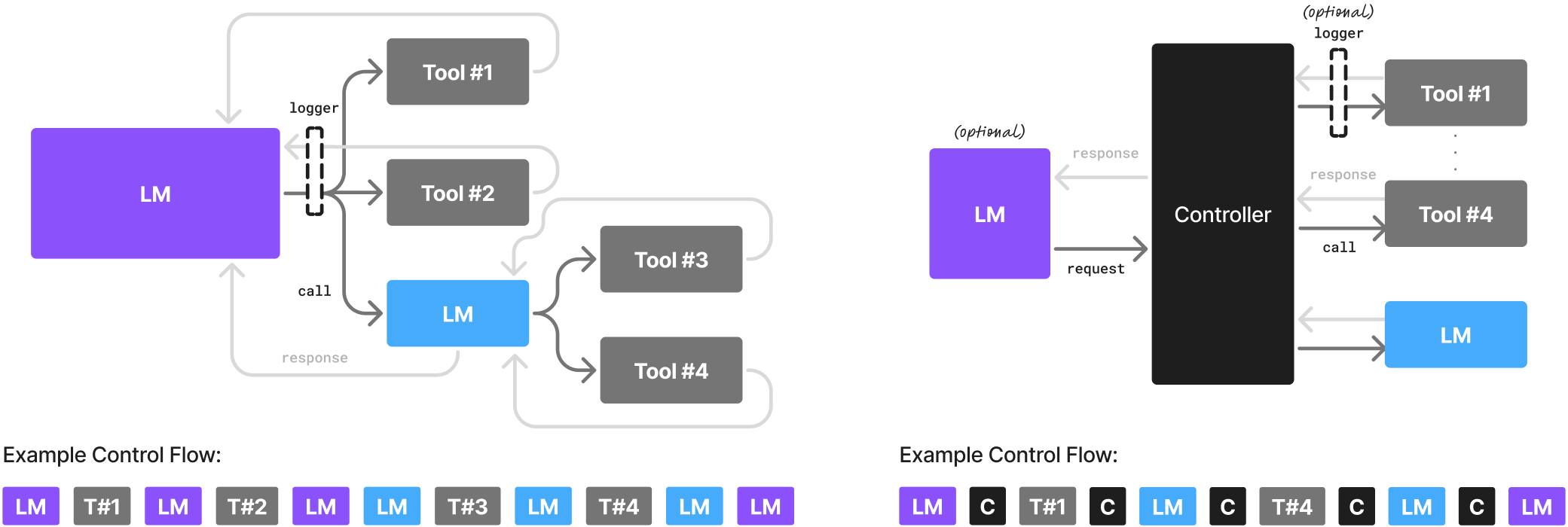

- Operational Fit for Modular Systems: The real magic lies in modularity. Think of agents as orchestra conductors that delegate the heavy thinking to big models only when needed. SLMs handle the routine work: they are faster, more predictable, and far less prone to hallucinations and formatting errors.

- Economics that Scale: Running LLMs at scale can bleed budgets dry. SLMs cut costs by 10×–30× per token in many cases. Faster inference, fewer resources, and the agility to fine-tune overnight instead of over weeks? That’s a real business advantage.
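To see what a 10x to 30x per-token gap means at scale, here is a back-of-the-envelope calculation. All the inputs are hypothetical placeholders, not published prices: the LLM price of $10 per million tokens, the 20x saving (the middle of the quoted range), and the traffic figures. Substitute your own provider's numbers.

```python
# Hypothetical prices in USD per million tokens; real prices vary by provider and model.
LLM_PRICE_PER_M = 10.00
SLM_PRICE_PER_M = LLM_PRICE_PER_M / 20  # assumes a 20x per-token saving (mid-range of 10x-30x)


def monthly_cost(requests_per_day: int, tokens_per_request: int,
                 price_per_m: float, days: int = 30) -> float:
    """Token spend for a month of traffic at a flat per-million-token price."""
    tokens = requests_per_day * tokens_per_request * days
    return tokens / 1_000_000 * price_per_m


if __name__ == "__main__":
    llm = monthly_cost(50_000, 1_500, LLM_PRICE_PER_M)
    slm = monthly_cost(50_000, 1_500, SLM_PRICE_PER_M)
    print(f"LLM: ${llm:,.2f}  SLM: ${slm:,.2f}  saved: ${llm - slm:,.2f}")
```

Under these assumptions, 50,000 requests a day at 1,500 tokens each add up to 2.25 billion tokens a month. That comes to about $22,500 on the LLM versus $1,125 on the SLM.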

SLMs Just Might Be What The Doctor Ordered

SLMs (Small Language Models) are still LLMs in architecture (transformer-based, trained on text), but:

- They’re smaller in parameter size (hundreds of millions to a few billion vs 70B+).

- They’re designed for efficiency, reliability, and narrow scope, not universal reasoning.

- They are part of Generative AI because they generate or transform text, code, or structured data, but in a more controlled, domain-tuned way.

Think of them as GenAI’s little cousins: still capable of “generation,” but optimized for specific tasks rather than broad open-ended creativity.

Here are some real-world scenarios where SLMs give you the perfect fit, and when reaching for an LLM still makes sense:

Use Cases for SLMs:

- Intent Classification in Automation: Sorting emails into categories based on keywords and structure. An LLM is overkill here.

- JSON Output for Tool Calls: Generating API call payloads that must strictly conform to a schema. SLMs can be fine-tuned to reliably stick to that format.

- Routine Summaries: “Summarize the latest ticket updates.” The structure is fixed; context is narrow, ideal for SLM efficiency.

- Edge Deployments: Privacy-critical applications on local hardware (e.g. ChatRTX). SLMs run fast, discreetly, and cost-effectively.
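Whatever model generates a tool call, it is prudent to validate the payload before executing it. The sketch below checks model output against a schema using only the standard library. The create_ticket tool, its fields, and the allowed priority values are hypothetical. A real system might use a JSON Schema validator or constrained decoding instead.

```python
import json
from typing import List, Optional, Tuple

# Hypothetical schema for an imaginary "create_ticket" tool: field -> required type.
CREATE_TICKET_SCHEMA = {"title": str, "priority": str, "assignee_id": int}
ALLOWED_PRIORITIES = {"low", "medium", "high"}


def validate_tool_call(raw: str) -> Tuple[Optional[dict], List[str]]:
    """Parse model output and check it against the schema.

    Returns (payload, []) on success or (None, errors) on failure.
    """
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, [f"invalid JSON: {exc.msg}"]
    if not isinstance(payload, dict):
        return None, ["payload must be a JSON object"]

    errors = []
    for field, expected in CREATE_TICKET_SCHEMA.items():
        if field not in payload:
            errors.append(f"missing field: {field}")
        elif type(payload[field]) is not expected:  # exact match, so True is not accepted as an int
            errors.append(f"{field} must be of type {expected.__name__}")
    extra = sorted(set(payload) - set(CREATE_TICKET_SCHEMA))
    if extra:
        errors.append(f"unexpected fields: {extra}")
    priority = payload.get("priority")
    if isinstance(priority, str) and priority not in ALLOWED_PRIORITIES:
        errors.append(f"priority must be one of {sorted(ALLOWED_PRIORITIES)}")
    return (None, errors) if errors else (payload, [])


if __name__ == "__main__":
    print(validate_tool_call('{"title": "VPN down", "priority": "high", "assignee_id": 42}'))
    print(validate_tool_call('{"title": "VPN down", "priority": "urgent"}'))
```

When validation fails, a common pattern is to feed the error list back to the model and ask it to retry. This keeps malformed calls from ever reaching the API.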

Use Cases for LLMs:

- Strategic Planning & Complex Reasoning: Asking an agent to plan a launch campaign or market strategy. You need deep, multifaceted reasoning that only a big model can offer.

- Creative, Open-Ended Dialogue: For assistance, brainstorming, or summarizing across domains, a generalist model is necessary.

- Cross-Task Generalization: When agents must interpret new commands or domains they weren’t explicitly trained for, LLMs provide the adaptability.

What about a hybrid? We may soon learn where a hybrid approach is well suited. OpenAI is already using one for GPT-5, but remember that their tool is designed for developers with a wide variety of use cases.
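One common hybrid pattern, not necessarily the one OpenAI uses, is a cascade. Every request goes to the SLM first and escalates to the LLM only when the small model is unsure. In this minimal sketch, fake_slm and fake_llm are hypothetical stand-ins for real model clients. The confidence score and the 0.8 threshold are assumptions; in practice, confidence might come from token log-probabilities or a separate classifier.

```python
from typing import Callable, Tuple

# A "model" here is any callable that returns (answer, confidence in [0, 1]).
Model = Callable[[str], Tuple[str, float]]


def cascade(prompt: str, small: Model, large: Model,
            threshold: float = 0.8) -> Tuple[str, str]:
    """Try the small model first; escalate only if its confidence is below threshold."""
    answer, confidence = small(prompt)
    if confidence >= threshold:
        return answer, "small"
    answer, _ = large(prompt)
    return answer, "large"


# Hypothetical stand-ins for real model clients, for illustration only.
def fake_slm(prompt: str) -> Tuple[str, float]:
    if "refund" in prompt.lower():
        return "intent: billing", 0.95
    return "intent: unknown", 0.30


def fake_llm(prompt: str) -> Tuple[str, float]:
    return "detailed answer from the large model", 0.99


if __name__ == "__main__":
    print(cascade("Where is my refund?", fake_slm, fake_llm))
    print(cascade("Draft a go-to-market plan for Europe", fake_slm, fake_llm))
```

A cascade differs from a router in that it pays for the small call on every request. In return, it only escalates when the small model itself signals doubt.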

NVIDIA’s stance doesn’t diminish LLMs. It’s like saying, “Don’t bring a knife to a gunfight”: match the tool to the fight.

This isn’t a crazy idea; it’s a smart, economical decision. By championing SLMs, NVIDIA is showing that intelligent design can put AI into the hands of more people: faster, cheaper, and smarter.

Use Cases for Classic ML Applications

Guess what? For many use cases, you probably don't even need an SLM. Here are examples of simple ML projects that need neither LLMs nor SLMs, just good old-fashioned classical machine learning:

Everyday Applications

- Handwritten Digit Recognition (MNIST): A staple for beginners; logistic regression, an SVM, or a shallow neural net does the job.

- Face Recognition (Binary Classification): Is this person in the authorized dataset or not?

- Voice Command Detection: Classify short audio clips into “yes/no/stop/go” categories with a simple CNN, or with MFCC features plus a random forest.

Transportation & IoT

- Predictive Maintenance: Classify whether a machine needs servicing soon, based on sensor data.

- Traffic Flow Classification: Identify whether congestion is “low”, “medium”, or “high” from camera or sensor counts.

- Energy Consumption Forecasting: A job for regression models, not generative AI.
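As a minimal illustration of regression-based forecasting, the sketch below fits ordinary least squares with a single feature. It uses daily average temperature to predict consumption. The single feature and the data are hypothetical. Real forecasters usually add features such as day of week, holidays, and recent lagged load.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x with one feature."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def forecast(model, x):
    """Predict consumption for a given temperature from a fitted (intercept, slope)."""
    intercept, slope = model
    return intercept + slope * x


if __name__ == "__main__":
    # Hypothetical history: daily average temperature (°C) vs. building load (kWh).
    temps = [18, 21, 24, 27, 30, 33]
    load_kwh = [410, 445, 470, 512, 540, 581]
    model = fit_line(temps, load_kwh)
    print(f"Forecast for a 29 °C day: {forecast(model, 29):.0f} kWh")
```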

Retail & Business

- Customer Churn Prediction: Given transaction history, classify whether a customer is likely to churn.

- Fraud Detection: Spot fraudulent transactions using anomaly detection or ensemble classifiers.

- Recommendation Starter: “People who bought X also bought Y.” Matrix factorization, not an LLM, solves this elegantly.
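For fraud detection, a simple starting point is robust anomaly detection on transaction amounts. This sketch uses the modified z-score, which is built on the median and the median absolute deviation. It uses the commonly cited 0.6745 constant and 3.5 cutoff from Iglewicz and Hoaglin. Looking at amount alone is a simplifying assumption; real fraud models combine many features, often in ensemble classifiers.

```python
from statistics import median


def flag_anomalies(amounts, threshold=3.5):
    """Return indices of amounts whose modified z-score exceeds threshold."""
    med = median(amounts)
    mad = median([abs(a - med) for a in amounts])  # median absolute deviation
    if mad == 0:
        return []  # no spread to compare against; a real system needs a fallback rule
    return [i for i, a in enumerate(amounts)
            if 0.6745 * abs(a - med) / mad > threshold]


if __name__ == "__main__":
    # Hypothetical card transactions for one customer, in dollars.
    amounts = [12.0, 15.5, 11.2, 14.8, 13.1, 12.9, 950.0, 14.0]
    print(flag_anomalies(amounts))  # → [6]
```

The median and MAD are used instead of the mean and standard deviation because a single huge outlier inflates the standard deviation enough to hide itself in small samples.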

Email & Document Classification

- Spam Detection: A classic supervised classification problem; Naive Bayes, logistic regression, or gradient boosting often outperform heavier models.

- Document Type Classifier: Is this a résumé, invoice, or contract? A bag-of-words or TF-IDF + SVM setup works beautifully.

- Sentiment Analysis: For “positive/negative/neutral” on structured product reviews, small ML models are fast and reliable.
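To show how little machinery spam detection needs, here is a from-scratch multinomial Naive Bayes classifier. It uses add-one (Laplace) smoothing and whitespace tokenization. The six training messages are hypothetical. With a real corpus, you would more likely reach for a library implementation such as scikit-learn's MultinomialNB.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase whitespace tokenization (deliberately simple)."""
    return text.lower().split()


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, docs, labels):
        self.classes = sorted(set(labels))
        self.word_counts = {c: Counter() for c in self.classes}
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(doc))
        n = len(labels)
        self.log_priors = {c: math.log(labels.count(c) / n) for c in self.classes}
        self.vocab = set().union(*self.word_counts.values())
        self.totals = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def predict(self, doc):
        v = len(self.vocab)

        def log_score(c):
            # Words never seen in training are skipped.
            return self.log_priors[c] + sum(
                math.log((self.word_counts[c][w] + 1) / (self.totals[c] + v))
                for w in tokenize(doc) if w in self.vocab)

        return max(self.classes, key=log_score)


if __name__ == "__main__":
    docs = ["win a free prize now", "free money click now", "claim your free prize",
            "meeting moved to monday", "please review the attached report", "lunch on monday"]
    labels = ["spam", "spam", "spam", "ham", "ham", "ham"]
    clf = NaiveBayes().fit(docs, labels)
    print(clf.predict("free prize inside"))  # → spam
```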

Healthcare & Diagnostics

- Disease Prediction from Lab Results: Predict diabetes, anemia, or heart risk from structured lab test data. Logistic regression or decision trees suffice.

- Image-Based Screening: Classifying X-rays into “normal” vs “abnormal” using a CNN trained on small datasets. No need for LLM reasoning.
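Here is a minimal sketch of disease-risk prediction with logistic regression trained by batch gradient descent. It is written from scratch to keep it self-contained. The two features (fasting glucose in mg/dL and BMI), the eight patient rows, and the labels are invented for illustration; they are not clinical data or thresholds. A real model needs a validated dataset and a library such as scikit-learn.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_scaler(rows):
    """Per-feature mean and standard deviation for standardization."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    stds = [math.sqrt(sum((v - m) ** 2 for v in c) / len(c)) or 1.0
            for c, m in zip(cols, means)]
    return means, stds


def scale(row, scaler):
    means, stds = scaler
    return [(v - m) / s for v, m, s in zip(row, means, stds)]


def train_logreg(X, y, lr=0.1, epochs=2000):
    """Batch gradient descent on log-loss; X must already be standardized."""
    w, b, n = [0.0] * len(X[0]), 0.0, len(X)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            grad_w = [g + err * xj for g, xj in zip(grad_w, xi)]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_proba(row, model, scaler):
    """Probability of the positive class (label 1) for one raw feature row."""
    w, b = model
    x = scale(row, scaler)
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


if __name__ == "__main__":
    # Hypothetical rows: [fasting glucose mg/dL, BMI]; label 1 = at risk.
    raw = [[85, 22], [90, 24], [95, 23], [100, 26],
           [130, 31], [145, 33], [160, 35], [175, 30]]
    labels = [0, 0, 0, 0, 1, 1, 1, 1]
    scaler = fit_scaler(raw)
    model = train_logreg([scale(r, scaler) for r in raw], labels)
    print(f"Risk for [180, 34]: {predict_proba([180, 34], model, scaler):.2f}")
```

Standardizing the features first keeps gradient descent well conditioned when the features sit on very different scales, as glucose and BMI do.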

Final Thoughts

SLMs and classic ML applications don’t just save you money; they empower you to build faster, think smarter, and scale higher. The future of agentic AI isn’t about one giant brain; it’s about a symphony of right-sized specialists, each playing its part.

Again, I've always been a proponent of avoiding overkill. Not everyone needs a GPU farm or a data center with thousands of powerful GPU pods. In fact, I've argued that as software and hardware become more performant, today's heavy investments will not matter at all. Coming up with groundbreaking solutions doesn't require sheer power, but smart and frugal decisions by the engineers and managers working in AI.

Choosing the right AI approach can be challenging. If you’d like to explore what fits your needs best, connect with me on LinkedIn.